Introduction

Brief Overview of Containerization

Containerization is a form of operating-system-level virtualization that allows developers to run multiple isolated applications on a single system. Unlike traditional virtual machines (VMs), containers share the host system’s kernel, which makes them lightweight and efficient. The technology has become important in modern software development because it provides a consistent environment for applications, tackling the classic “it works on my machine” problem 😊.

Introduction to Docker

Docker is a platform that has become synonymous with containerization. It gives developers a simple way to containerize their applications and make them portable across different environments. Docker has become an important part of the DevOps pipeline, simplifying the management of application dependencies and environments.

Understanding Docker

What is Docker?

Docker is an open-source platform designed to automate the deployment of applications inside lightweight, portable containers. A container, in this context, is a standardized unit of software that packages code and its dependencies so the application can run quickly and reliably across different computing environments.

Core Components of Docker

- Docker Engine: The core component that creates, runs, and manages containers. It’s the runtime environment where containers are executed.

- Docker Images: These are read-only templates used to create containers. An image includes everything needed to run a piece of software, including the code, runtime, libraries, environment variables, and configuration files.

- Docker Hub: A cloud-based registry where Docker images are stored and shared. Developers can pull images from Docker Hub or push their own images for others to use.

- Docker CLI: The command-line interface used to interact with Docker, for example to build images and to create, run, and stop containers.

What are Containers?

Definition and Purpose

Containers are isolated environments that package an application and its dependencies together. This isolation ensures that the application runs consistently, regardless of where it’s deployed. Containers encapsulate the application’s code, runtime, system tools, libraries, and settings, providing a lightweight and portable runtime environment.

Comparison with Virtual Machines (VMs)

- Isolation Level: Containers share the host OS kernel, while each VM runs its own guest OS on a hypervisor, which gives VMs stronger but heavier isolation.

- Performance: Containers are more lightweight and efficient than VMs because they don’t require a full OS to run.

- Resource Utilization: Containers use system resources more efficiently, allowing for higher density on the same hardware compared to VMs.

Key Advantages of Containers

- Portability: A containerized application can run on any host with a compatible container runtime, OS kernel, and CPU architecture, whether that is a developer’s laptop, a test environment, or a production cloud.

- Scalability: Containers make it easy to scale applications horizontally, adding more instances of a container to handle increased load.

- Faster Deployment: Containers start up quickly and are lightweight, enabling rapid deployment and updates to applications.

Docker Architecture and Components

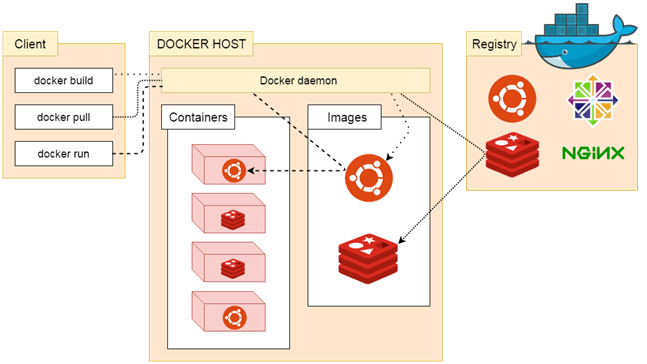

Docker Daemon

The Docker Daemon (dockerd) is a background process that manages Docker objects such as images, containers, networks, and volumes. It listens for Docker API requests and carries them out, and it can also communicate with other daemons to manage Docker services.

Docker Client

The Docker Client (docker) is the command-line tool that lets users interact with the Docker Daemon. Each command issued through the client is sent to the daemon as a Docker Engine API request (by default over a Unix socket on Linux), and the daemon carries out the requested action.
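As a rough illustration of that client/daemon split, the sketch below does what the CLI does under the hood: it sends an HTTP request to the daemon's Engine API, here the `GET /_ping` health-check endpoint. It uses only the Python standard library and assumes the default Linux socket path `/var/run/docker.sock` (Docker Desktop and rootless setups may use other paths); `UnixHTTPConnection` and `ping_daemon` are hypothetical helper names, not part of any Docker SDK.

```python
import http.client
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that connects to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 2.0):
        # "localhost" only fills the Host header; the socket path decides where we connect.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()  # do not leak the socket if the daemon is unreachable
            raise
        self.sock = sock


def ping_daemon(socket_path: str = "/var/run/docker.sock") -> bool:
    """Return True if a Docker daemon answers GET /_ping with HTTP 200."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", "/_ping")
        return conn.getresponse().status == 200
    except (OSError, http.client.HTTPException):
        # Missing socket, permission denied, timeout, or a non-HTTP reply.
        return False
    finally:
        conn.close()


if __name__ == "__main__":
    print("Docker daemon reachable:", ping_daemon())
```

From a shell, curl --unix-socket /var/run/docker.sock http://localhost/_ping performs the same check.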

Docker Registry

Docker Registry is a storage system for Docker images. Docker Hub is the default registry where public images are stored, but private registries can also be set up for internal use.
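Short image names are resolved against Docker Hub by default: nginx is shorthand for docker.io/library/nginx:latest, while a name whose first component looks like a host (it contains a dot or a port, or is localhost) points at that registry instead. The sketch below approximates those rules; it is simplified (no character validation, no handling of the index.docker.io alias), and `normalize_image_ref` is a hypothetical helper name.

```python
DEFAULT_REGISTRY = "docker.io"
DEFAULT_TAG = "latest"


def normalize_image_ref(ref: str) -> str:
    """Expand a short image reference into registry/repository[:tag][@digest] form."""
    name, digest = ref, ""
    if "@" in name:
        name, digest = name.split("@", 1)

    # A tag is a ':' after the last '/', so "localhost:5000/app" keeps its port.
    tag = ""
    if name.rfind(":") > name.rfind("/"):
        name, tag = name.rsplit(":", 1)

    first, _, rest = name.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = DEFAULT_REGISTRY, name
        if "/" not in path:
            # Official images live under the "library" namespace on Docker Hub.
            path = "library/" + path

    result = f"{registry}/{path}"
    if tag:
        result += ":" + tag
    elif not digest:
        result += ":" + DEFAULT_TAG  # no tag and no digest: Docker assumes "latest"
    if digest:
        result += "@" + digest
    return result
```

For example, normalize_image_ref("myuser/app") yields docker.io/myuser/app:latest, which is where docker pull myuser/app would look.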

Docker Compose

Docker Compose is a tool that allows developers to define and manage multi-container Docker applications. Using a YAML file, developers can specify the configuration of multiple services that make up an application.
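Compose uses the depends_on entries in that YAML file to decide start order: dependencies start before the services that need them, and stopping happens in reverse. The sketch below models only that ordering step with the standard library's graphlib; the service names are hypothetical, and this is not how Compose itself is implemented. Note that a plain depends_on waits only for the dependency's container to start, not for the application inside it to be ready; Compose's condition: service_healthy option covers that case.

```python
from graphlib import CycleError, TopologicalSorter

# Mirrors a hypothetical compose.yaml:
#   services:
#     web:   { image: myorg/web, depends_on: [api] }
#     api:   { image: myorg/api, depends_on: [db, cache] }
#     db:    { image: postgres }
#     cache: { image: redis }
DEPENDS_ON = {
    "web": ["api"],
    "api": ["db", "cache"],
    "db": [],
    "cache": [],
}


def start_order(depends_on: dict[str, list[str]]) -> list[str]:
    """Order services so that every dependency starts before its dependents."""
    try:
        # TopologicalSorter treats each list as the node's predecessors.
        return list(TopologicalSorter(depends_on).static_order())
    except CycleError as exc:
        raise ValueError("depends_on contains a cycle") from exc


def stop_order(depends_on: dict[str, list[str]]) -> list[str]:
    """Dependents stop first: the reverse of the start order."""
    return list(reversed(start_order(depends_on)))


if __name__ == "__main__":
    print("start:", start_order(DEPENDS_ON))
    print("stop: ", stop_order(DEPENDS_ON))
```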

Docker Swarm

Docker Swarm is Docker’s native clustering and orchestration tool, enabling the management of a cluster of Docker engines, turning them into a single virtual Docker engine.
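A simplified model of what the swarm scheduler does for a replicated service (for example one created with docker service create --replicas 5 --name web nginx): spread the tasks across nodes, and when a node fails, reschedule its tasks on the remaining nodes so the desired replica count is restored. The sketch ignores resource reservations, placement constraints, and placement preferences; `spread` and `reschedule` are hypothetical names.

```python
def _least_loaded(counts: dict[str, int]) -> str:
    # min() keeps the first node on ties, so ties are broken by node order.
    return min(counts, key=counts.get)


def spread(replicas: int, nodes: list[str]) -> dict[str, int]:
    """Place each replica on the node currently running the fewest tasks."""
    if not nodes:
        raise ValueError("a swarm needs at least one node")
    counts = {node: 0 for node in nodes}
    for _ in range(replicas):
        counts[_least_loaded(counts)] += 1
    return counts


def reschedule(counts: dict[str, int], failed: str) -> dict[str, int]:
    """Move the failed node's tasks to the survivors to restore the replica count."""
    survivors = {node: n for node, n in counts.items() if node != failed}
    if not survivors:
        raise ValueError("no healthy nodes left")
    for _ in range(counts.get(failed, 0)):
        survivors[_least_loaded(survivors)] += 1
    return survivors
```

The key idea is declarative: the service states how many replicas should exist, and the orchestrator keeps correcting the actual placement toward that number.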

How Docker Works

Container Lifecycle

- Creating and Running Containers: Docker containers are created from Docker images. Once created, they can be run, stopped, restarted, and deleted using Docker CLI commands.

- Example: docker run -d --name mycontainer nginx starts a container named “mycontainer” running the Nginx web server.

- Managing Containers: Containers can be managed using commands to start, stop, and remove them.

- Example: docker stop mycontainer stops the running container.
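The lifecycle commands above can be modelled as a small state machine. The states loosely follow the status that docker inspect reports (created, running, paused, exited); the sketch omits transient states such as restarting and removing, treats removal as a final "removed" state, and ignores forced removal (docker rm -f). The `Container` class and its `TRANSITIONS` table are hypothetical.

```python
# command -> (states it may be issued from, resulting state)
TRANSITIONS = {
    "create": ({None}, "created"),
    "start": ({"created", "exited"}, "running"),
    "stop": ({"running"}, "exited"),
    "restart": ({"running", "exited"}, "running"),
    "pause": ({"running"}, "paused"),
    "unpause": ({"paused"}, "running"),
    "rm": ({"created", "exited"}, "removed"),  # without -f, a running container cannot be removed
}


class Container:
    """Toy model of one container's lifecycle; None means it does not exist yet."""

    def __init__(self, name: str):
        self.name = name
        self.state = None

    def apply(self, command: str) -> str:
        allowed, target = TRANSITIONS[command]
        if self.state not in allowed:
            raise RuntimeError(
                f"cannot {command} {self.name!r} while it is {self.state or 'nonexistent'}"
            )
        self.state = target
        return self.state

    def run(self) -> str:
        # docker run is docker create followed by docker start.
        self.apply("create")
        return self.apply("start")
```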

Networking in Docker

- Docker Networks: Docker uses networks to allow containers to communicate with each other and with the outside world. Docker automatically creates a default bridge network, but custom networks can also be defined.

- Example: docker network create mynetwork creates a custom network for containers.

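- Connecting Containers: Containers attached to the same user-defined network can reach each other by container name, which is how services in a microservices architecture typically find one another. (The older --link flag is a legacy feature.)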

- Example: docker run -d --network=mynetwork --name myapp nginx runs a container named “myapp” (here from the nginx image) on the “mynetwork” network.

Data Management

- Volumes: Volumes are the preferred mechanism for persisting data generated and used by Docker containers. They exist outside the lifecycle of a given container, preserving data when containers are deleted.

- Example: docker run -v mydata:/data nginx mounts a named volume called “mydata” at /data inside the container, creating the volume if it does not already exist.

- Bind Mounts: Bind mounts allow the user to mount a file or directory from the host machine into the container.

- Example: docker run --mount type=bind,source=/path/on/host,destination=/path/in/container nginx binds a host directory to a container directory.
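With the -v shorthand, Docker decides the mount type from the first field: a host path produces a bind mount, while a plain name refers to a named volume that Docker manages itself. The helper below mimics that decision for Linux-style paths only; `parse_volume_flag` and `Mount` are hypothetical names, and the real CLI accepts more options than the ro flag handled here. The --mount syntax avoids the ambiguity by spelling out type= explicitly.

```python
from dataclasses import dataclass


@dataclass
class Mount:
    kind: str  # "bind" or "volume"
    source: str  # host path, volume name, or "" for an anonymous volume
    target: str  # path inside the container
    read_only: bool = False


def parse_volume_flag(spec: str) -> Mount:
    """Classify a -v argument of the form [source:]target[:options]."""
    parts = spec.split(":")
    if len(parts) == 1:
        # "-v /data" alone: Docker creates an anonymous volume mounted at /data.
        return Mount("volume", "", parts[0])
    source, target = parts[0], parts[1]
    options = parts[2].split(",") if len(parts) > 2 else []
    # Anything that looks like a path is a bind mount; a plain name is a named volume.
    kind = "bind" if source.startswith(("/", ".")) else "volume"
    return Mount(kind, source, target, read_only="ro" in options)
```

So -v mydata:/data is a named volume, whereas -v /mydata:/data:ro bind-mounts the host directory /mydata read-only.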

Use Cases and Applications

Microservices Architecture

Docker simplifies deploying microservices by allowing each service to run in its own container. This approach ensures that services are isolated, scalable, and easily deployable.

Continuous Integration/Continuous Deployment (CI/CD)

Docker is integral to modern CI/CD pipelines. It allows for consistent testing environments, where the same Docker images used for development are used in testing and production.

Multi-Cloud Deployments

Docker containers are portable across different cloud providers, which makes it easier to deploy applications in a multi-cloud environment with less concern for differences in the underlying infrastructure.

Development Environment Consistency

Docker helps keep development, testing, and production environments consistent by running the same image in each, reducing the chances of environment-specific bugs.

Best Practices for Using Docker and Containers

Security Considerations

- Running Containers as Non-Root: For security, containers should run as non-root users to minimize potential damage if compromised.

- Image Vulnerability Scanning: Regularly scan Docker images for vulnerabilities using tools like Clair or Trivy.
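To turn scanning into an enforced step, a CI job can fail the build when the scanner reports serious findings. Trivy can do this by itself (trivy image --severity HIGH,CRITICAL --exit-code 1 myimage); the sketch below applies the same gate to a saved JSON report (trivy image --format json --output report.json myimage) for cases where custom filtering is wanted. It assumes Trivy's JSON layout of Results entries whose Vulnerabilities lists carry Severity, VulnerabilityID, and PkgName fields; verify this against the Trivy version you use. The image name and the helpers `blocking_findings` and `gate` are hypothetical.

```python
import json
import sys

BLOCKING_SEVERITIES = {"CRITICAL", "HIGH"}


def blocking_findings(report: dict, blocking=BLOCKING_SEVERITIES) -> list[tuple[str, str, str]]:
    """Return (target, vulnerability ID, package) for every finding at a blocking severity."""
    findings = []
    for result in report.get("Results") or []:
        # Targets without findings may omit "Vulnerabilities" or set it to null.
        for vuln in result.get("Vulnerabilities") or []:
            if vuln.get("Severity") in blocking:
                findings.append(
                    (result.get("Target", "?"), vuln.get("VulnerabilityID", "?"), vuln.get("PkgName", "?"))
                )
    return findings


def gate(report_path: str) -> int:
    """Print blocking findings and return a process exit code (1 fails the CI job)."""
    with open(report_path, encoding="utf-8") as fh:
        findings = blocking_findings(json.load(fh))
    for target, vuln_id, pkg in findings:
        print(f"{target}: {vuln_id} ({pkg})")
    return 1 if findings else 0


if __name__ == "__main__" and len(sys.argv) == 2:
    sys.exit(gate(sys.argv[1]))
```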

Optimizing Docker Images

- Minimize Image Size: Use lightweight base images such as alpine to reduce image size, which speeds up pulls and deployments and shrinks the attack surface.

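- Layer Caching: Order Dockerfile instructions from least to most frequently changing (for example, copy and install dependency manifests before copying application source) so Docker can reuse cached layers and speed up builds.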
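The effect of instruction order on caching can be seen with a simplified model: each layer's cache key depends on its parent's key, on the instruction text, and (for COPY/ADD) on a checksum of the copied files, so one changed input invalidates that layer and every layer after it. The sketch below illustrates that rule; it is not BuildKit's actual algorithm, the two Dockerfile orderings are hypothetical, and strings such as "src-v1" stand in for real file checksums.

```python
import hashlib


def layer_keys(steps: list[tuple[str, str]]) -> list[str]:
    """Chain a cache key through each (instruction, content_checksum) step."""
    keys, parent = [], ""
    for instruction, content in steps:
        key = hashlib.sha256(f"{parent}|{instruction}|{content}".encode()).hexdigest()
        keys.append(key)
        parent = key  # each key depends on every step before it
    return keys


def rebuilt_steps(before: list[tuple[str, str]], after: list[tuple[str, str]]) -> list[int]:
    """Indices of steps whose cache key changed, i.e. steps that must be rebuilt."""
    return [i for i, (a, b) in enumerate(zip(layer_keys(before), layer_keys(after))) if a != b]


def deps_first(src: str) -> list[tuple[str, str]]:
    # Dependency manifest copied and installed before the frequently changing source.
    return [("FROM python:3.12-slim", ""), ("WORKDIR /app", ""),
            ("COPY requirements.txt .", "reqs-v1"),
            ("RUN pip install -r requirements.txt", ""),
            ("COPY . .", src)]


def source_first(src: str) -> list[tuple[str, str]]:
    # All source copied first, so any code edit also invalidates the install step.
    return [("FROM python:3.12-slim", ""), ("WORKDIR /app", ""),
            ("COPY . .", src),
            ("RUN pip install -r requirements.txt", "")]
```

With a source-only edit, rebuilt_steps(deps_first("src-v1"), deps_first("src-v2")) reports only the final COPY, while the source-first ordering also re-runs the slow pip install.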

Monitoring and Logging

- Monitoring: Tools like Prometheus and Grafana can be used to monitor container performance.

- Logging: Docker captures each container’s stdout and stderr through its logging drivers (viewable with docker logs), while external log aggregators such as the ELK Stack can centralize and enhance log management.
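Because Docker's logging drivers capture whatever a container writes to stdout and stderr, one common approach is to have the application write one JSON object per line to stdout, which log shippers feeding an aggregator such as the ELK Stack can parse without custom patterns. A minimal sketch using only Python's standard logging module; the field names are an arbitrary choice, not a required schema.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object on one line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "time": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


def configure_logging(stream=sys.stdout, level=logging.INFO) -> logging.Logger:
    """Send all logs to `stream` (stdout by default) so Docker's logging driver captures them."""
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    root = logging.getLogger()
    root.handlers[:] = [handler]  # replace any previously configured handlers
    root.setLevel(level)
    return root


if __name__ == "__main__":
    configure_logging()
    logging.getLogger("app").info("service started")
```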

Resource Management

- Limiting Resources: Set CPU and memory limits on containers to prevent any single container from hogging resources.

- Example: docker run --memory="256m" --cpus="1.5" nginx limits the container’s memory to 256 MB and its CPU usage to 1.5 cores.
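Docker's documentation describes --cpus="1.5" as shorthand for --cpu-period="100000" --cpu-quota="150000" (the container may use 150 ms of CPU time in every 100 ms period), and memory suffixes are binary, so 1m is 1024 × 1024 bytes. The helpers below reproduce that arithmetic; they are hypothetical and accept only an integer followed by an optional b, k, m, or g suffix, a subset of what the CLI parses.

```python
import re

UNITS = {"": 1, "b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}
CFS_PERIOD_US = 100_000  # default CFS scheduling period used for --cpus (100 ms)


def memory_bytes(value: str) -> int:
    """Convert a --memory value such as "256m" to bytes (binary units)."""
    match = re.fullmatch(r"(\d+)([bkmg]?)", value.strip().lower())
    if not match:
        raise ValueError(f"unrecognised memory value: {value!r}")
    number, unit = match.groups()
    return int(number) * UNITS[unit]


def cpu_quota(cpus: float, period_us: int = CFS_PERIOD_US) -> tuple[int, int]:
    """Return the (cpu-period, cpu-quota) pair equivalent to --cpus."""
    return period_us, round(cpus * period_us)


def equivalent_flags(memory: str, cpus: float) -> list[str]:
    """Spell out --memory/--cpus using the lower-level flags they map to."""
    period, quota = cpu_quota(cpus)
    return [f"--memory={memory_bytes(memory)}", f"--cpu-period={period}", f"--cpu-quota={quota}"]
```

For the example above, equivalent_flags("256m", 1.5) gives a memory limit of 268435456 bytes with a 100000 µs period and a 150000 µs quota.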

Challenges and Limitations

Complexity in Orchestration

As the number of containers grows, managing them becomes complex. Tools like Kubernetes are often required to handle large-scale container orchestration.

Networking Overhead

In complex network configurations, Docker’s networking can introduce some performance overhead, especially in multi-host setups.

Stateful Applications

Managing stateful applications in containers can be challenging, particularly with data persistence and storage management across container restarts and migrations.

Future of Docker and Containers

Kubernetes Integration

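Kubernetes, an open-source orchestration tool, has become the de facto standard for managing containerized applications at scale. Images built with Docker follow the OCI image format, so Kubernetes can run them through CRI-compatible runtimes such as containerd or CRI-O, even though Kubernetes removed its built-in Docker Engine integration (dockershim) in version 1.24.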

Serverless Architectures

Containers are increasingly being used in serverless computing models, where they provide a lightweight and portable runtime environment for serverless functions.

Emerging Standards

The Open Container Initiative (OCI) maintains open specifications for container runtimes, images, and image distribution, which keeps tools from different vendors compatible and fosters innovation across the container ecosystem.

Conclusion

Recap of Key Points

Docker and containers have transformed software development by making applications more portable, scalable, and efficient. Understanding the basics of Docker and its advanced features is crucial for modern developers.

Final Thoughts

As the industry continues to evolve, mastering Docker and containerization will be increasingly important for developers aiming to build resilient, scalable, and future-ready applications.